Become a VMware Certified Professional (VCP)

Virtualisation is currently one of the hottest topics in the Information Technology arena. There are only a few players, such as Microsoft Hyper-V and Citrix, and VMware without doubt enjoys the leader position. As VMware software began to take its place in the data center and demand respect in the industry, the need for a certification path became clear.

Saturday, June 30, 2012

How to Create a VMware lab on your machine

Sunday, March 4, 2012

Virtual Machine

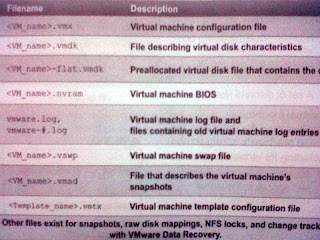

The table lists the files that make up a VM. Except for the log files, the name of each file starts with the VM's name.

- Configuration file (.vmx).

- One or more virtual disk files. The first virtual disk has the files .vmdk and -flat.vmdk.

- A file containing the VM's BIOS (.nvram).

- A VM log file (.log) and a set of files used to archive old log entries (-#.log). Six of these files are maintained at any one time.

- A swap file (.vswp).

- A snapshot description file (.vmsd). This file is empty if the virtual machine has no snapshots.

If the virtual machine is converted to a template, a virtual machine template configuration file (.vmtx) replaces the virtual machine configuration file (.vmx).

If the virtual machine has more than one disk file, the file pair for the second and each later disk is named <VM_name>_#.vmdk and <VM_name>_#-flat.vmdk, where # is the next number in the sequence, starting with 1.
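The naming rule for disk files can be sketched in a few lines of Python. This is only an illustration of the pattern described in the text, not a VMware tool; the function name `disk_file_pairs` and the VM name `web01` are made up for the example.

```python
def disk_file_pairs(vm_name, disk_count):
    """Return the (.vmdk, -flat.vmdk) file name pair for each virtual disk.

    The first disk uses the bare VM name; the second and later disks
    add _1, _2, ... as described in the text.
    """
    pairs = []
    for index in range(disk_count):
        suffix = "" if index == 0 else f"_{index}"
        pairs.append((f"{vm_name}{suffix}.vmdk", f"{vm_name}{suffix}-flat.vmdk"))
    return pairs


# Hypothetical VM called "web01" with three virtual disks.
for descriptor, flat in disk_file_pairs("web01", 3):
    print(descriptor, flat)
```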

Six archive log files are maintained at any one time. For example, -1.log to -6.log might exist at first. The next time an archive log file is created, for example when the virtual machine is powered off and powered back on, -2.log to -7.log are maintained (-1.log is deleted), then -3.log to -8.log, and so on.
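The sliding window of archive logs can be pictured with a small Python sketch. It only models the numbering described above; the function name `archive_log_window` is an assumption for illustration and nothing here touches a real datastore.

```python
def archive_log_window(rotations, keep=6):
    """Return the archive log suffixes kept after `rotations` new archives.

    rotations=0 is the starting point from the text (-1.log to -6.log);
    each new archive drops the oldest file and adds the next number.
    """
    first = rotations + 1
    return [f"-{number}.log" for number in range(first, first + keep)]


print(archive_log_window(0))  # starting set
print(archive_log_window(1))  # after one more archive is created
```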

A VM can have other files, for example if one or more snapshots were taken or if raw device mappings were added. A VM will have an additional lock file if it resides on an NFS datastore. A VM will have a change tracking file if it is backed up with the VMware Data Recovery appliance.

The configuration files of a VM can be displayed by going to Configuration > Datastore, right-clicking the datastore and choosing Browse. Another way to see the VM configuration files is to go to Configuration > Datastore > View and select 'Show All Virtual Machine Files'.

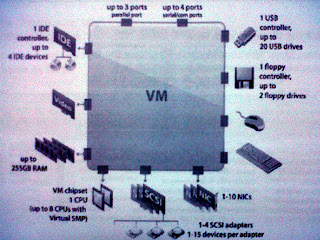

Virtual Machine Hardware

A VM uses virtual hardware. Each guest OS sees ordinary hardware devices; it does not know that these devices are virtual. All virtual machines have uniform hardware (except for a few variations that the system administrator can apply). Uniform hardware makes virtual machines portable across VMware virtualization platforms. We can add and configure a VM's CPU, memory, hard disks, CD/DVD drives, floppy drives, SCSI devices and so on. We cannot add video devices, but we can configure the existing ones. A USB device connected to the physical host can be made available to only one VM at a time. To make it available to another VM, it must first be disconnected from the current VM.

CPU and Memory: VMware Virtual SMP allows you to configure a VM with up to 8 vCPUs and up to 255 GB of memory.

Virtual Disk: A VM should have at least one virtual disk. Adding the first virtual disk implicitly adds a virtual SCSI adapter for it to connect to. The ESX/ESXi host offers a choice of adapters: BusLogic Parallel, LSI Logic Parallel, LSI Logic SAS, and VMware Paravirtual. The VM creation wizard in the vSphere Client selects the type of virtual SCSI adapter based on the choice of guest OS. By default a virtual disk is thick provisioned, but this can be changed to thin provisioned if needed. There is also a special disk mode called independent. Independent mode has two options: persistent and non-persistent. Choose persistent if changes should be written to the disk immediately and permanently. Choose non-persistent to discard changes when the VM is powered off or reverted to a snapshot. In most cases there is no need to change the disk mode for virtual disks.

vNetwork Interface Card: The network cards available for a virtual machine are Flexible (vlance and vmxnet), e1000, vmxnet2 (Enhanced vmxnet) and vmxnet3.

Other Devices: A VM can use a CD/DVD drive or floppy drive on the physical host. CD-ROM and DVD drives connect either to a physical drive or to an ISO file. We can also add generic SCSI devices, such as tape libraries, to a VM. These devices can be connected to the virtual SCSI adapters on a VM.

Virtual Machine Console: From the console we can send power changes to a VM and access the VM's guest OS. Ctrl+Alt+Del is sent to the guest from the console. If VMware Tools is not installed, press Ctrl+Alt to release the pointer from the VM's console.

Saturday, March 3, 2012

Networking

- Connecting VMs to the physical network.

- Connecting the VMkernel to the physical network. VMkernel services include access to IP storage (such as NFS or iSCSI), vMotion, and access to the management network on an ESXi host.

- Providing networking for the service console (ESX only).

Separate IP stacks are configured for each VMkernel port and the ESXi management network port. Each ESXi management network port and each VMkernel port must be configured with its own IP address, netmask and gateway. All three port groups connect to the outside world through the physical adapters assigned to the vSwitch. We can place all networks on a single vSwitch or use multiple vSwitches, depending on the situation.
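Because each port needs its own IP address, netmask and gateway, it can help to sanity-check a planned configuration before typing it into the host. The Python sketch below is one way to do that with the standard `ipaddress` module. The port names and addresses are hypothetical, and the rule that the gateway should sit inside the port's subnet is an assumption of this example, not something stated above.

```python
import ipaddress


def check_port_config(ip, netmask, gateway):
    """Return a list of problems found in one port's IP settings."""
    problems = []
    # strict=False lets us pass a host address together with its netmask.
    network = ipaddress.ip_network(f"{ip}/{netmask}", strict=False)
    if ipaddress.ip_address(gateway) not in network:
        problems.append(f"gateway {gateway} is outside {network}")
    return problems


def find_duplicate_ips(ports):
    """Return (first_port, second_port, ip) for every IP used twice."""
    seen = {}
    duplicates = []
    for name, (ip, _netmask, _gateway) in ports.items():
        if ip in seen:
            duplicates.append((seen[ip], name, ip))
        else:
            seen[ip] = name
    return duplicates


# Hypothetical plan: one management port and one VMkernel port for vMotion.
plan = {
    "Management Network": ("192.168.10.11", "255.255.255.0", "192.168.10.1"),
    "VMkernel-vMotion": ("192.168.20.11", "255.255.255.0", "192.168.20.1"),
}
for port, settings in plan.items():
    print(port, check_port_config(*settings))
print(find_duplicate_ips(plan))
```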

There are two types of vSwitches: the vNetwork Standard Switch and the vNetwork Distributed Switch. The vNetwork Standard Switch is configured at host level. We can have a maximum of 4088 vSwitch ports per standard switch and 4096 vSwitch ports per host. The vNetwork Distributed Switch is configured at vCenter. Its components are the same as those of the vNetwork Standard Switch, but it functions as a single virtual switch across all associated hosts. This allows virtual machines to maintain a consistent network configuration as they migrate across multiple hosts.
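The two port limits quoted above are easy to check against a planned layout. The following Python sketch does only that arithmetic; the switch names and port counts are hypothetical, and the limits are simply the figures given in this post.

```python
MAX_PORTS_PER_VSWITCH = 4088  # per standard switch, as quoted in the post
MAX_PORTS_PER_HOST = 4096     # across all vSwitches on one host


def check_port_plan(ports_per_switch):
    """Return a list of limit violations for a host's planned vSwitches."""
    problems = []
    for name, ports in ports_per_switch.items():
        if ports > MAX_PORTS_PER_VSWITCH:
            problems.append(f"{name}: {ports} ports exceeds {MAX_PORTS_PER_VSWITCH}")
    total = sum(ports_per_switch.values())
    if total > MAX_PORTS_PER_HOST:
        problems.append(f"host total {total} ports exceeds {MAX_PORTS_PER_HOST}")
    return problems


# Hypothetical host with two standard switches.
print(check_port_plan({"vSwitch0": 120, "vSwitch1": 4000}))
```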

The virtual switches will be discussed in more detail in later posts.

Monday, October 24, 2011

ESXi - Storage

RDM (Raw Device Mapping): For VMs running on an ESX/ESXi host, instead of storing VM data in a virtual disk file, you can store the data directly on a raw LUN. Storing the data this way is useful if you are running applications in your VMs that must know the physical characteristics of the storage device. Mapping a raw LUN allows you to use existing SAN commands to manage storage for the disk. The RDM is used to map to the raw LUN. An RDM is a special file in a VMFS datastore that acts as a proxy for a raw LUN. It maps a file in a VMFS datastore to a raw volume. A VM then references the RDM, which in turn points to the raw volume holding the VM's data. Raw device mapping is recommended when a VM must interact with a real disk on the SAN, for example when you make disk array snapshots or when you have a large amount of data that you do not want to move onto a virtual disk. Raw device mapping is also very important when VMs are to be clustered using MSCS.

Virtual Machine File System (VMFS): A VMFS datastore is created by assigning unpartitioned disk space. Once it is assigned, an ESXi host automatically discovers the VMFS volume. The datastore is a clustered file system that allows multiple physical servers to read and write to the same storage device simultaneously. This clustered file system enables unique virtualization-based services, which include:

Live migration of running VMs across physical servers.

Automatic restart of a failed VM on another physical server.

Clustering of VM across different physical servers.

VMFS allows IT organisations to greatly simplify virtual machine provisioning by efficiently storing the entire machine state in a central location. VMFS allows multiple ESX/ESXi hosts to concurrently access shared virtual machine storage. The size of a VMFS datastore can be increased dynamically while the VMs residing on it are powered on. A VMFS datastore efficiently stores both large and small files belonging to a VM. A VMFS datastore can be configured to use an 8 MB block size to support large virtual disk files of up to 2 TB in size. A VMFS datastore uses sub-block addressing to make efficient use of storage for small files. VMFS provides block-level, distributed locking to ensure that the same virtual machine is not powered on by multiple servers at the same time. If a physical server fails, the on-disk lock for each virtual machine can be released so that virtual machines can be restarted on other physical servers.

VMFS can be deployed on locally attached storage, FC SAN and iSCSI storage, and these appear to a VM as a mounted SCSI device. The virtual disk hides the physical storage layer from the virtual machine's OS. For the OS on a VM, VMFS preserves the internal file system semantics. These semantics ensure correct application behavior and data integrity for applications running in VMs.

To view the existing datastores on an ESXi host, log in to the host using the VMware vSphere Client and click Configuration >> Storage. Click any datastore whose details are needed. It will show the volume label (datastore..), device, capacity, free space, file system and so on.

To add another datastore, click Add Storage from here, leave the default option Disk/LUN selected >> click Next, which brings up a screen where you can see the unassigned LUNs. Select the required storage and click Next >> review the layout and click Next >> give it a suitable name and click Next >> this is the section where you can choose an appropriate block size. A virtual disk of up to 256 GB requires a 1 MB block size, 512 GB requires 2 MB, 1 TB requires 4 MB and 2 TB requires 8 MB. >> Click Finish. To understand block size in relation to disk size in more detail, please refer to http://www.vmware.com/pdf/vi3_301_201_config_max.pdf . Although this PDF talks about host 3.1 and before, it is still good to take a look.
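The block-size figures quoted above amount to a small lookup table. The Python sketch below picks the smallest block size that fits a planned virtual disk, using only the four pairs from this post (with 1 TB taken as 1024 GB); the function name `min_block_size_mb` is made up for the example.

```python
# (block size in MB, largest virtual disk in GB), as listed in the post.
BLOCK_SIZE_LIMITS = [(1, 256), (2, 512), (4, 1024), (8, 2048)]


def min_block_size_mb(disk_gb):
    """Return the smallest VMFS block size (MB) that supports disk_gb."""
    for block_mb, max_disk_gb in BLOCK_SIZE_LIMITS:
        if disk_gb <= max_disk_gb:
            return block_mb
    raise ValueError(f"{disk_gb} GB is larger than the 2 TB maximum in the table")


# Hypothetical planned virtual disk sizes in GB.
for size in (100, 300, 800, 2048):
    print(size, "GB ->", min_block_size_mb(size), "MB block size")
```

Choosing the block size matters at creation time because, as the steps above show, it is selected while the datastore is being added.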

LOCAL AND SHARED STORAGE

All VMware ESX and ESXi installables are installed on local storage. Local storage is also ideal for small environments: when all VMs are located on local storage, management becomes easier. Shared storage is used for:

>vMotion.

>fast central repository for virtual machine templates

>recovery of VMs on another host in case of a host failure.

>Clustering of VM across hosts.

>Allocate large amounts of storage.

IP Storage

ESX and ESXi hosts support two types of IP storage: iSCSI and NAS. iSCSI is used to hold one or more VMFS datastores, whereas NAS is used to hold one or more NFS datastores. Both are used to hold virtual machines, ISO images and templates. Features such as vMotion, HA and DRS are supported on these datastores. ESX and ESXi hosts support up to 64 NFS datastores. Both iSCSI and NAS can run over 10 Gbps Ethernet, which provides increased storage performance.

iSCSI Components

Let us take a scenario where an iSCSI SAN storage system has more than one LUN and two storage processors. Communication between the host and the storage happens over a TCP/IP network. The components in this scenario are as follows:

1. iSCSI storage, consisting of a number of physical disks presented as a number of LUNs, and storage processors connecting to the TCP/IP network.

2. TCP/IP Network

3. Physical servers with software or hardware iSCSI initiators (HBA) connecting to TCP/IP network.

An iSCSI initiator transmits SCSI commands over the IP network. A target receives SCSI commands from the IP network. You can have multiple initiators and targets in your iSCSI network. iSCSI is SAN oriented because the initiator finds one or more targets, a target presents LUNs to the initiator, and the initiator sends it SCSI commands. An initiator resides in the ESX/ESXi host. LUN masking is also available in iSCSI and works as it does in Fibre Channel. Ethernet switches do not implement zoning like Fibre Channel switches. Instead, you can use VLANs to create zones.
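The relationship between initiators, targets and LUN masking can be pictured with a toy model. The Python sketch below is purely conceptual and does not talk to any storage array; the class name `Target`, the IQN-style names and the LUN numbers are all hypothetical, and treating an unlisted initiator as seeing no LUNs is a modelling choice made for this example.

```python
class Target:
    """Toy model of an iSCSI target that presents LUNs to initiators."""

    def __init__(self, name, luns):
        self.name = name
        self.luns = set(luns)
        self.masks = {}  # initiator name -> set of LUN ids it may see

    def allow(self, initiator, luns):
        """Mask the target so `initiator` sees only the given LUNs."""
        self.masks[initiator] = set(luns) & self.luns

    def visible_luns(self, initiator):
        """Return the LUNs presented to `initiator` (none if not listed)."""
        return sorted(self.masks.get(initiator, set()))


# Hypothetical names: one storage target and two ESXi host initiators.
target = Target("iqn.2011-10.com.example:storage.sp-a", luns=[0, 1, 2])
target.allow("iqn.2011-10.com.example:esxi01", [0, 1])
target.allow("iqn.2011-10.com.example:esxi02", [2])

print(target.visible_luns("iqn.2011-10.com.example:esxi01"))
print(target.visible_luns("iqn.2011-10.com.example:esxi02"))
```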

Let us discuss the two kinds of iSCSI initiator: software and hardware. The software iSCSI initiator is VMware code built into the VMkernel. It allows the host to connect to the iSCSI storage device through standard network adapters. The software iSCSI initiator handles iSCSI processing while communicating with the network adapter. With the software iSCSI initiator you can use iSCSI technology without purchasing specialised hardware.

Hardware iSCSI - A hardware iSCSI initiator is a specialised third-party adapter capable of accessing iSCSI storage over TCP/IP. Hardware iSCSI initiators are divided further into two categories: dependent and independent hardware. A dependent hardware iSCSI initiator or adapter depends on VMware networking and on the iSCSI configuration and management interfaces provided by VMware. This type of adapter, such as a Broadcom 5709 NIC, presents a standard network adapter and iSCSI offload functionality for the same port. To make this type of adapter functional, one must set up networking for the iSCSI traffic and bind the adapter to an appropriate VMkernel iSCSI port.

An independent hardware iSCSI adapter handles all iSCSI and network processing and management for the host. The QLogic 4062C is an example of an independent hardware iSCSI adapter.

Thursday, September 15, 2011

Install and Configure a VMware ESXi 4.1 host

Let's now proceed with the installation. We are assuming a Dell server that meets all prerequisites, hardware requirements and so on, and we are installing from a DVD. To know more about the hardware requirements, please visit the link below: http://kb.vmware.com/kb/1022101

Installation

The first thing is to configure the root password. The root password is needed to be able to log in with the vSphere Client.

- Configure the IP address, subnet mask and gateway.

- Configure DNS servers.

Once the above configuration is done, press F2 to exit the DCUI and F12 to reboot your host. Make sure you add a host record to your DNS server for this ESXi host. Now you are ready to create virtual machines on your ESXi 4.1 host. Open a browser on any machine in the same subnet as your host. Type the host name or IP address of your ESXi 4.1 host and you will be presented with the screen below:

Click Download vSphere Client and, once the download completes, continue with the installation. Once installation is complete, launch the vSphere Client from Start > All Programs >> enter the IP address or host name of your ESXi 4.1 host >> enter the user name root and the password.

You are now in the world of virtualisation. Explore all the settings; my next post will be about creating virtual machines.

Monday, September 5, 2011

What is Virtualisation

HOST BASED VIRTUALISATION

It requires an operating system to be installed on a computer. For example, a virtualisation layer such as VMware Server or VMware Workstation is installed on a computer that is already running Windows 7/XP or any Linux operating system. This is called host-based virtualisation. Once VMware Server or Workstation is installed, virtual machines can be created and deployed. These virtual machines will have their own operating systems and sets of applications. Although this is a good solution for test environments, and to a certain extent for production, it might not be an ideal solution for production environments. There are a few drawbacks; for example, rebooting the host OS means all virtual machines are rebooted too.

VIRTUALISATION USING A BARE-METAL HYPERVISOR

A bare-metal hypervisor system does not require an operating system. The hypervisor itself is an operating system. It installs the virtualisation layer directly on a clean x86-based system. Because a bare-metal hypervisor architecture has direct access to the hardware resources, rather than going through an operating system, it is more efficient than a hosted architecture. This delivers greater scalability, robustness and performance. A hypervisor is the primary component of virtualisation that enables basic computer system partitioning, i.e. simple partitioning of CPU, memory and I/O. VMware ESX/ESXi employs a bare-metal hypervisor architecture on certified hardware for datacenter-class performance.

WHAT IS A VIRTUAL MACHINE

From a user's perspective, a virtual machine is a software platform that, like a physical computer, runs an operating system and applications.

From the hypervisor's perspective, a virtual machine is a discrete set of files. The main files are as follows:

- Configuration file

- Virtual disk file

- NVRAM settings file

- Log file

WHY VIRTUALISATION

Virtual machines have many advantages over physical machines.

Virtual machines are easy to move and copy across different hosts.

Saturday, July 30, 2011

VMware: Basic things to start with